Meta Introduces AI Voice Translation for Reels: Expanding Reach with English–Spanish Support

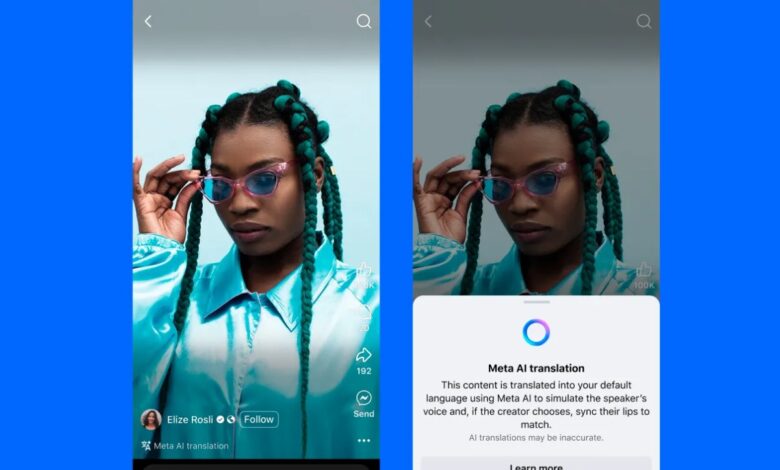

Meta has introduced an AI-powered voice translation tool for Reels on Facebook and Instagram. Currently supporting English and Spanish, the feature automatically dubs a creator’s voice and can lip-sync the video to match, preserving the original tone while making content accessible to a broader global audience. It is available to select users now, and Meta plans a wider rollout soon.

The rollout of Meta’s AI translations signals a shift toward more inclusive content creation. Reels creators can toggle the translation and lip-sync options before posting. Translated audio plays in the viewer’s preferred language, and viewers can opt out if they prefer the original audio.

**How the Feature Works**

Switch on the “Translate your voice with Meta AI” toggle before publishing your Reel. You can also choose to enable lip-sync matching. Creators receive a notification to review the AI-generated audio before it goes live. Viewers will see that a translation has been applied and can control preferences in settings.

**Why It Matters for Indian Creators**

For creators in Tier-2 cities like Indore, Kochi, or Jaipur, who often produce content in regional languages, this feature offers a chance to reach English- and Spanish-speaking audiences without learning new languages or hunting down translators. It can widen viewership and fan engagement with minimal additional effort.

At the same time, creators may worry about losing authenticity. Meta says its AI preserves tone and intent, but creators in smaller cities will watch closely to see whether machine-translated voices still capture the personal touch.

**Conclusion**

Meta’s AI dubbing feature is more than a convenience: it is a bridge across language barriers that could transform how creators share stories globally. For India’s next generation of content makers, especially those outside metro hubs, this could be a game-changer in building a global footprint with local voices.